AI Shifts Power

Scientists and academics don’t like to make predictions about the future, especially when it holds as many unknowns as the evolution of artificial intelligence (AI) and its impact on work and society. Historian Matthieu Leimgruber and philosopher Friedemann Bieber have nonetheless given thought to our AI future. Below, they address three questions: Will AI soon render us jobless? Can AI think? And who controls the processes employed to further develop AI?

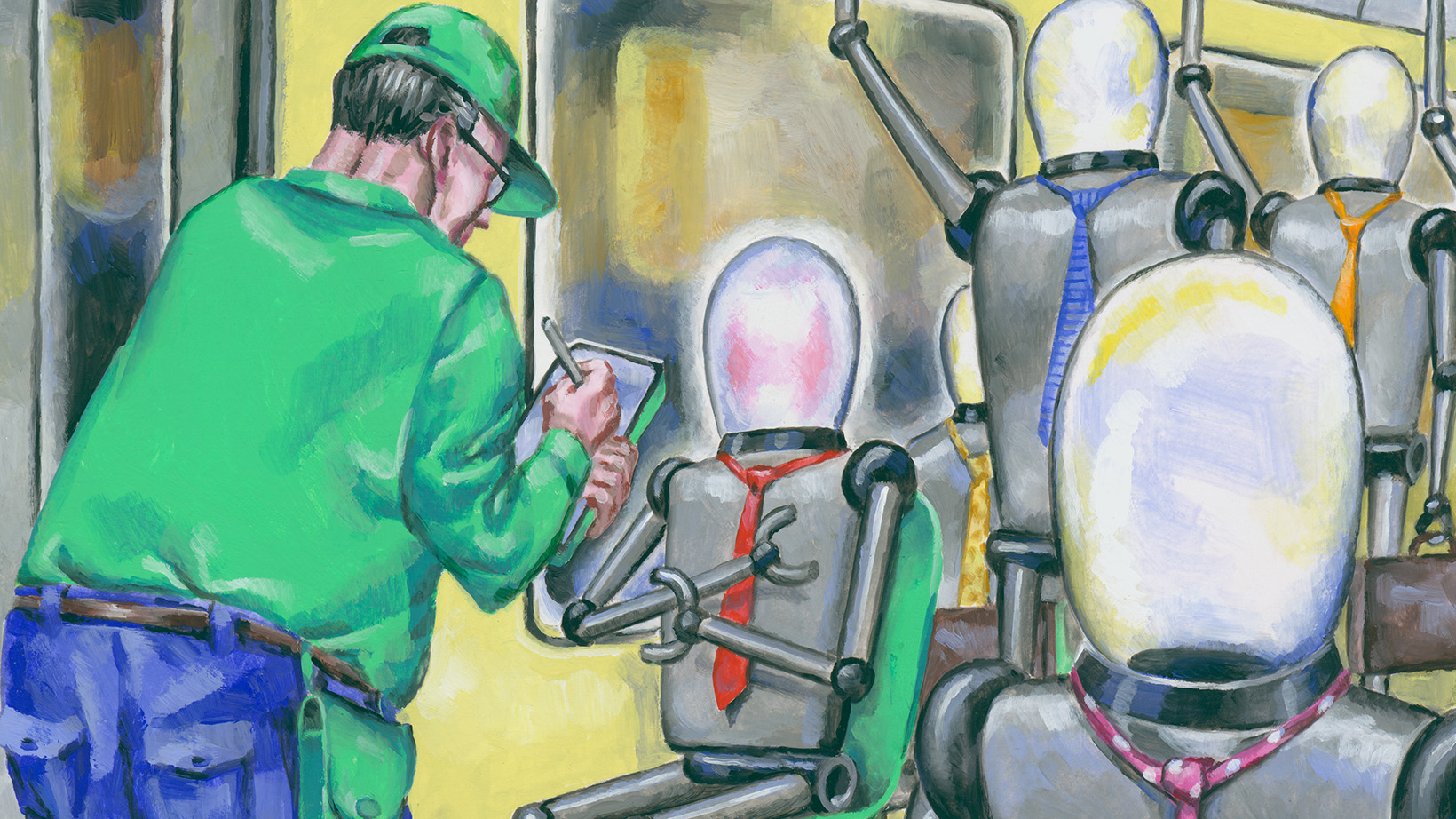

1. Will AI soon render us jobless?

Matthieu Leimgruber: There’s lots of speculation that AI will take jobs away from humans, but we haven’t seen that happening thus far. People are still doing an awful lot of work – only marginally less than 50 years ago – despite all of the technological innovations. So, I consider large-scale unemployment due to AI unlikely. Our work will change, but it won’t disappear.

Can you give an example?

Leimgruber: Take secretaries, for instance. They used to do a lot of repetitive work, such as copying letters or typing dictation. Nobody does that anymore these days. Secretaries nonetheless still exist; they just do more demanding tasks now.

Friedemann Bieber: Today even the best AI programs are not yet capable of doing certain things on their own because they don’t have the requisite cognitive abilities or lack physical experience in the world. If that changes one day, humans will be faced with the question of what’s left for them to do.

What would be left for us to do?

Bieber: Forms of labor such as “emotional work” or “care work”, which potentially cannot be substituted by AI. Emotional work, for example, would be listening to and being responsive to other people’s problems. Care work, for instance, is caring for the sick or raising children. There likely will be other areas as well where we do not want to dispense with humans, such as in jurisprudence and political governance.

What will we do with our time if AI takes over a large part of our work?

Bieber: Then we would have leisure for other things that interest us instead of having to work a job just to earn money. That would be the good scenario. But if we ever get to a point where machines can do practically everything better than people can, that arguably would plunge humanity into an existential crisis because it is important to us to be able to contribute something to society.

AI is hardworking and indefatigable. It will probably make some people very wealthy.

Bieber: Yes, AI may greatly increase prosperity. We might enter a world of superabundance. The question, though, will be how that wealth gets distributed because the owners of the intelligent machines will mainly be the ones that profit from the increased wealth at first.

Leimgruber: AI not only makes the economy more productive, but also involves huge costs and consumes enormous amounts of energy. That compounds our environmental problems. The question we have to ask ourselves is: Do we want economic growth at any price, even at the price of destroying the Earth? If not, then the energy available to us will impose limits on the deployment of AI, just as it will on other energy-intensive processes such as bitcoin mining.

2. Can AI think?

Leimgruber: No, AI doesn’t think. AI can summarize and reproduce what already exists and can do it very quickly. That looks like magic, but it isn’t comparable to the critical thinking that humans are capable of. That’s why AI sometimes produces completely nonsensical things without realizing it, simply because AI doesn’t have consciousness like humans do. I constantly try to hammer that home to my students as well.

Bieber: It depends on how we define “thinking.” To me at least, it does not seem evident that machines can’t think. If an AI program, for example, can explain jokes or recognize analogies, isn’t it thinking in that case, at least in a certain sense? There’s this theory that AI is a “stochastic parrot” that merely mimics things. But AI can do more than that. The training of AI creates an optimized representation of the world. Under that specific framework, AI then is even capable of drawing reasoned conclusions, not always but oftentimes successfully.

There are two scenarios for the future evolution of AI. The conservative one posits that AI will reach the cognitive boundary that separates reactive and reflective thinking and will be unable to cross it. In that case, in the future we will work together with AI in a variety of forms in which humans do the governing and control the processes. What will happen, though, if AI breaks through that boundary?

Bieber: It could come to what British mathematician I.J. Good called an “intelligence explosion”. That would be the moment when intelligent systems gain the ability to improve themselves and perhaps even to further develop themselves recursively with no foreseeable end point. Humans really could get left behind in that case.

3. Who controls the processes employed to further develop AI?

If the aforementioned intelligence explosion really happens and AI becomes capable of evolving further on its own, that raises the question of whether we will still be able to control AI or whether artificial intelligence will take over power, a prospect that even AI developers warn is entirely possible. How do you see it?

Leimgruber: Humanity still holds the reins at the moment. It isn’t clear yet how that will evolve in the future.

Bieber: Even if we assume that AI won’t take over power itself, it can nonetheless endanger our society because the people who own and control the machines hold an enormous amount of clout over society. They could manipulate and undermine democratic structures.

A vision of how that might look is conveyed today by Big Tech companies like Facebook and Google, which frequently act very much on their own authority. A new chapter has been opened in this regard by X owner Elon Musk, who is using his platform to campaign for the election of Donald Trump. Must we fear an AI future? And what can we do to prevent disagreeable and potentially dangerous scenarios from becoming reality?

Bieber: I think the future is radically uncertain in this area. Many predictions about the evolution of AI have turned out to be much too optimistic. In 1956, for example, there was a conference on AI at Dartmouth College that is now legendary: researchers at that time thought that in a span of a few weeks they could achieve breakthroughs in making machines capable of improving themselves. Today the progress feels very palpable and fast-paced, but perhaps the current research paradigm will soon reach its limits. We nevertheless should take the evolution of AI seriously.

Why?

Bieber: AI is already starting to shift power relations. In totalitarian countries, for instance, AI enables almost airtight surveillance of all citizens. The centralization of control in state or private hands is a danger that we can counter through strict regulation and vigilance. More generally, we should also push for international regulations on research, particularly research on state-of-the-art AI models, with external controls and clear accountability and liability rules. We could use the rules governing work with viruses as a blueprint here, for example. And above all, we should ask ourselves whether we really want to develop machines that are cognitively superior to us in all areas in the first place. A few thousand researchers and venture capitalists are driving their development at present. But if we believe them when they say there’s a real chance that they will succeed, then it seems obvious to me that this decision must be made democratically by all of humanity.

Leimgruber: In 2023, leading scientists, academics and entrepreneurs, including Elon Musk, signed an open letter proposing a pause in the development of large language models to give our societies time to think about steering and even regulating AI advancements. The current misgivings resemble those that were expressed back in the mid-20th century about the hazards and risks of nuclear fission. Today, as then, a revolutionary technology is giving rise to big hopes and fears. The future of AI thus remains an open question, one that we will be grappling with for a long time to come, much as we did in the Homeric debates about energy and nuclear arms.

This article is from the dossier ‘With brains and AI’ from the UZH Magazine 3/2024